Customer service is getting worse.

That's the finding from two recent reports. Companies are struggling to avoid service failures and keep their customers happy. But a third report and two top customer experience experts offer a glimmer of hope.

You can stand out from the competition by being average.

Forget wows. Stop worrying about delight. Don't fret over extraordinary. Just be consistently, perfectly, boringly average.

There are a few caveats.

Your average has to be just a little better than the competition.

Your average has to be consistent.

More on those in a moment. But first, let's look at the state of customer service.

Is customer service getting worse?

Yes. Two prominent consumer studies show that customers perceive that service is getting worse.

The first is the American Customer Satisfaction Index (ACSI), which publishes a quarterly national customer satisfaction score for the United States. The composite ACSI score has declined or remained the same for seven quarters.

Image source: The American Customer Satisfaction Index

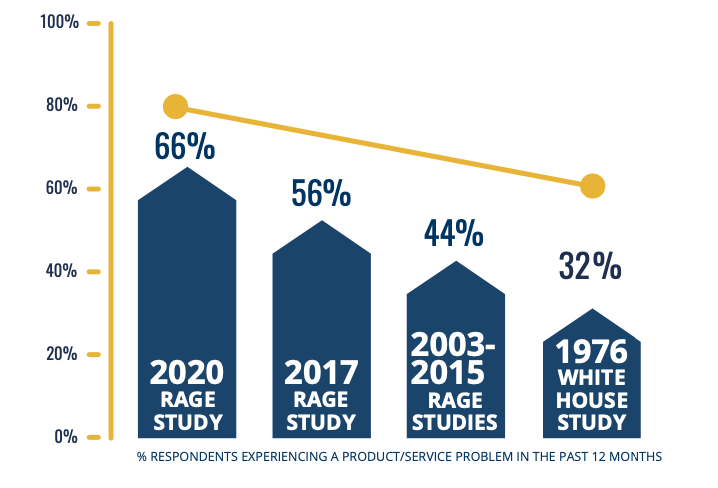

The second study is the 2020 National Customer Rage Study from Customer Care Measurement and Consulting (CCMC). It found that the number of households experiencing at least one problem over the past 12 months increased by 10 percentage points since the 2017 study.

Image source: Customer Care Measurement and Consulting

There is some hope.

I conducted a survey of 1,084 U.S. consumers in November 2020 and asked them what type of service they receive most often. Surprisingly, 66.7 percent said they usually receive good service.

Get the Report

Download a copy of the report, “What type of service do customers receive most often?”

Should you try to delight every customer?

No. It’s impossible to delight every customer, and trying to do so can be costly. It might seem counterintuitive, but research shows that delighting customers has no significant benefits.

Customer experience expert Matt Dixon is the author of the classic business book The Effortless Experience.

Dixon told me in an interview that he and his colleagues set out to research customer delight and discovered something unexpected. They found that companies were better off avoiding service failures.

"On average, most service interactions don't create loyalty at all. They create disloyalty."

That's because, try as they might, companies often fail to keep their promises.

Products don't work

Services fall short of expectations

Delivery is a logistical nightmare

CCMC's Customer Rage Study shows that companies continue to make life miserable for customers when something goes wrong:

2.9 contacts were needed to resolve a typical complaint.

58 percent of customers never got a resolution.

65 percent felt rage while trying to get a problem solved.

Despite the widespread use of surveys, many companies are doing a poor job identifying the problems that lead to customer rage.

For example, I recently experienced 18 points of frustration when ordering a table and barstools. It's likely the company only identified one.

There's got to be a better way.

How average service can win customers

Average really isn't the right word. Consistency is the key, as Shep Hyken points out in his excellent book, The Cult of the Customer. Hyken has a fantastic definition of customer amazement.

"Amazement is above average, but it's above average all of the time."

Hyken elaborates that companies win customers by being just a little above average, but doing it consistently. It's the consistency that captures customers' attention and eventually earns their trust.

You can hear more from Hyken in this interview.

There are a few things companies can do to be more consistent. The starting point is to create a customer service vision, which is a shared definition of outstanding service that gets everyone on the same page.

I researched customer-focused companies while writing The Service Culture Handbook. A clear vision was a common trait that set elite organizations apart.

The next step is to gather voice-of-customer feedback. Surveys can play a role, but there are many ways to gather customer feedback without a survey.

It's also important to identify reasons employees struggle to provide consistent customer service. I uncovered ten obstacles in my book, Getting Service Right.

Finally, it's important to understand that every customer is different.

Which brings us back to my study on the type of service customers receive most often. Most people felt they usually receive good service, which is service that meets their expectations.

But there was nuance to the responses. Perceptions changed by age group, gender, and geography. For example:

More women than men reported they receive outstanding service most often.

People in the western United States were more likely to report outstanding service.

Older customers felt they receive more outstanding service.

Take Action

Think about the companies you admire most.

They didn't earn their reputation by wowing customers once in a while. Elite companies are known for dependably good experiences.

Let your competition flail about trying to delight customers. They'll inevitably fall short. You can stand out by being really good.